Manual checks in Google Search Console waste engineering cycles on fragmented data pulls. Google Search Console automation via the API's Python client turns this into a scripted workflow that pulls search performance data and indexing diagnostics straight into your CI/CD or monitoring stack.

At Nine Lives Development, we integrate these APIs into AI-driven SEO systems that run headless on schedules. This enables technical SEO automation without depending on the dashboard. Modular code scales across sites. Pretty handy for teams juggling multiple properties.

GSC API Automation: Overcoming OAuth 2.0 Authentication Hurdles

GSC API automation starts with credentials, and OAuth 2.0 trips up most setups. Create a project in Google Cloud Console and enable the Google Search Console API. Configure the OAuth consent screen, then create an OAuth 2.0 client ID (application type: Desktop app) and download its client secret as a JSON file.

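Save the JSON as `client_secret.json`. In production, point the script at it through an environment variable instead of a hard-coded path. Those annoying browser popups are a separate problem. They go away once you persist the refresh token after the first consent, or switch to a service account.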

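```python
import os

from google_auth_oauthlib.flow import InstalledAppFlow

# Path to the downloaded OAuth client secret; override via env var in production
CLIENT_SECRET_FILE = os.getenv('GSC_CLIENT_SECRET_PATH', 'client_secret.json')
# Read-only access covers sites, sitemaps, Search Analytics, and URL Inspection
SCOPES = ['https://www.googleapis.com/auth/webmasters.readonly']

flow = InstalledAppFlow.from_client_secrets_file(CLIENT_SECRET_FILE, SCOPES)
# One-time browser consent; port=0 picks any free local port
credentials = flow.run_local_server(port=0)

# Persist the credentials, including the refresh token, for headless reuse
with open('token.json', 'w') as token_file:
    token_file.write(credentials.to_json())
```

Missing consent-screen scopes cause common failures, so add `webmasters.readonly` explicitly. Check the exact property identifier in GSC first. `siteUrl` must match it character for character: `https://example.com/` for a URL-prefix property, or `sc-domain:example.com` for a Domain property.

Saving the credentials to `token.json` lets cron jobs reuse the refresh token without another browser prompt, which cuts auth overhead in pipelines. Honestly, it is a game-changer for headless runs.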
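Because `siteUrl` must match a property exactly, a small guard can fail fast before any query runs. The sketch below assumes you pass it the dict returned by `service.sites().list().execute()`. `resolve_site_url` is a hypothetical helper name. The near-miss hints it checks (a missing trailing slash, or a `www.` host that is really verified as a Domain property) are illustrative, not exhaustive.

```python
from urllib.parse import urlparse


def resolve_site_url(candidate: str, sites_response: dict) -> str:
    """Return candidate if it exactly matches a property from sites.list().

    Hypothetical helper: raises ValueError listing likely matches otherwise.
    """
    available = [entry['siteUrl'] for entry in sites_response.get('siteEntry', [])]
    if candidate in available:
        return candidate

    # Common near-misses: a URL-prefix property missing its trailing slash,
    # or a site that is actually verified as a Domain property
    host = urlparse(candidate).hostname or ''
    bare_host = host[4:] if host.startswith('www.') else host
    near_misses = {candidate + '/', f'sc-domain:{host}', f'sc-domain:{bare_host}'}
    hints = [site for site in available if site in near_misses]
    raise ValueError(
        f'{candidate!r} is not a verified property; did you mean {hints or available}?'
    )
```

For example: `SITE_URL = resolve_site_url('https://example.com/', service.sites().list().execute())`.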

Store secrets in your vault, and never commit `client_secret.json` or `token.json` to version control. We deploy this in Flask apps with `/oauth2callback` routes for team access, and the resulting data feeds shared SEO dashboards.
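One way to keep the client secret in a vault rather than on disk is to inject its JSON contents through an environment variable and build the flow from a dict. This is a sketch. The variable name `GSC_CLIENT_SECRET_JSON` and the helper `load_client_config` are hypothetical. `InstalledAppFlow.from_client_config` is the `google-auth-oauthlib` classmethod that accepts such a dict.

```python
import json
import os


def load_client_config(env_var: str = 'GSC_CLIENT_SECRET_JSON') -> dict:
    """Parse an OAuth client config from an environment variable (hypothetical name)."""
    raw = os.environ.get(env_var)
    if not raw:
        raise RuntimeError(f'{env_var} is not set; inject it from your secret store')
    config = json.loads(raw)
    # Downloaded client files nest their fields under 'installed' (desktop) or 'web'
    if 'installed' not in config and 'web' not in config:
        raise ValueError('Expected an "installed" or "web" OAuth client config')
    return config


# Usage (requires google-auth-oauthlib):
# flow = InstalledAppFlow.from_client_config(load_client_config(), SCOPES)
```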

Search Console Integrations: Core Endpoints for Engineers

Search Console integrations hinge on four endpoints: `sites`, `sitemaps`, `searchanalytics`, and `urlInspection`. Build a service once, then query it repeatedly.

List sites and sitemaps for proactive monitoring.

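```python
from googleapiclient.discovery import build

# The searchconsole v1 API exposes sites, sitemaps, searchanalytics, and
# urlInspection; the older webmasters v3 API lacks urlInspection
service = build('searchconsole', 'v1', credentials=credentials)

# List every property the authorized account can access
sites = service.sites().list().execute()
for site in sites.get('siteEntry', []):
    print(site['siteUrl'])

# siteUrl must match the property exactly, including the trailing slash
sitemaps = service.sitemaps().list(siteUrl='https://example.com/').execute()
```

Sitemap automation in GSC detects submission gaps. Each entry from `sitemaps().list()` reports:

- `errors` and `warnings` counts
- an `isPending` flag
- `lastSubmitted` and `lastDownloaded` timestamps

Together these surface processing problems before they cascade. Pipe them into alerts via Slack webhooks in your workflow. Catch issues early, save headaches later.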
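Those sitemap fields can be turned into alert text with a couple of small functions. A sketch, assuming the response shape of `sitemaps().list()`, where entries sit under `sitemap` and the int64 counters arrive as strings. `find_sitemap_problems` and `slack_payload` are hypothetical names. The `{'text': ...}` body is the basic Slack incoming-webhook format.

```python
def find_sitemap_problems(sitemaps_response: dict) -> list:
    """Summarize problems in a sitemaps().list() response (hypothetical helper)."""
    problems = []
    for sitemap in sitemaps_response.get('sitemap', []):
        path = sitemap.get('path', '<unknown>')
        # int64 counters are serialized as JSON strings, so convert explicitly
        errors = int(sitemap.get('errors', 0))
        warnings = int(sitemap.get('warnings', 0))
        if errors or warnings:
            problems.append(f'{path}: {errors} errors, {warnings} warnings')
        if sitemap.get('isPending'):
            problems.append(f'{path}: still pending processing')
        if not sitemap.get('lastDownloaded'):
            problems.append(f'{path}: not yet downloaded by Google')
    return problems


def slack_payload(site_url: str, problems: list) -> dict:
    """Build a Slack incoming-webhook message body ({'text': ...})."""
    lines = '\n'.join(f'- {problem}' for problem in problems)
    return {'text': f'Sitemap issues on {site_url}:\n{lines}'}
```

To post it, something like `requests.post(SLACK_WEBHOOK_URL, json=slack_payload(site, problems), timeout=10)` would work. `SLACK_WEBHOOK_URL` is a placeholder for your own webhook.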

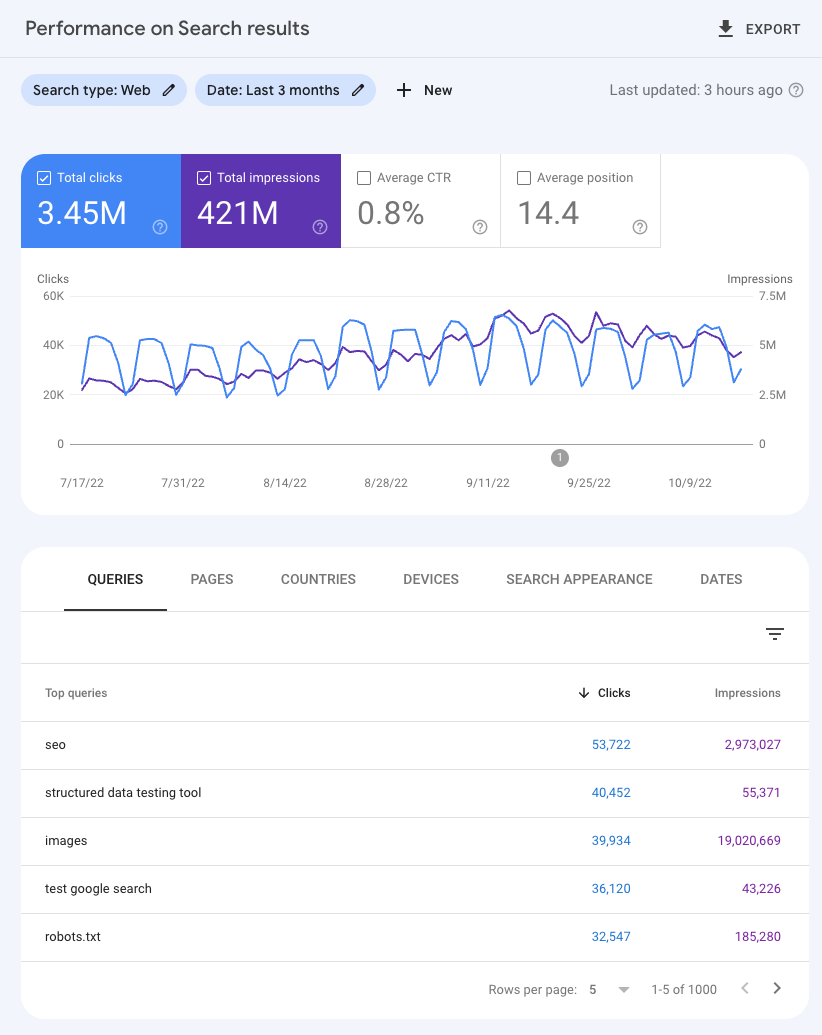

Search Analytics returns clicks, impressions, CTR, and average position. Use `searchanalytics.query()` with dimension filters for precision.
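CTR is clicks divided by impressions, and position is an average. Rolling several rows up (say, all pages in a folder) therefore means recomputing CTR from the summed counts and weighting position by impressions, not averaging it directly. A minimal sketch, assuming rows shaped like the API's `rows` entries; `summarize` is a hypothetical name. The totals approximate, but need not equal, property-level figures, since Search Console counts impressions differently when aggregating by property versus by page.

```python
def summarize(rows: list) -> dict:
    """Roll Search Analytics rows up into one set of metrics."""
    clicks = sum(row['clicks'] for row in rows)
    impressions = sum(row['impressions'] for row in rows)
    if not impressions:
        return {'clicks': clicks, 'impressions': 0, 'ctr': 0.0, 'position': None}
    # Weight each row's average position by the impressions behind it
    weighted = sum(row['position'] * row['impressions'] for row in rows)
    return {
        'clicks': clicks,
        'impressions': impressions,
        'ctr': clicks / impressions,
        'position': weighted / impressions,
    }
```

Take two rows at position 2.0 (100 impressions) and 10.0 (300 impressions). They roll up to 8.0, where a plain mean would report 6.0.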

Technical SEO Automation: Mastering Search Analytics Queries

Technical SEO automation shines in search performance data extraction. The `searchanalytics.query` method groups results by dimensions such as date, page, query, device, and country, and pages through far more rows than the 1,000-row tables in the UI.

Craft a request body for page-level drops.

```python
body = {
    'startDate': '2025-01-01',
    'endDate': '2025-01-31',
    'dimensions': ['page', 'device'],
    # Keep only blog URLs
    'dimensionFilterGroups': [{
        'filters': [{
            'dimension': 'page',
            'operator': 'contains',
            'expression': '/blog/',
        }],
    }],
    'rowLimit': 1000,  # API default is 1,000; the maximum is 25,000 per request
}

response = service.searchanalytics().query(siteUrl='https://example.com/', body=body).execute()

for row in response.get('rows', []):
    # 'keys' follows the order of 'dimensions': [page, device]
    page, device = row['keys']
    print(f"Page: {page}, Device: {device}, Impressions: {row['impressions']}, CTR: {row['ctr']:.2%}")
```

Dimension filters surface anomalies that site-wide aggregates hide, such as a mobile CTR cliff. Adding `'aggregationType': 'byPage'` to the request body aggregates metrics by canonical page URL instead of by property, which is what page-level bulk exports need. We wrap these calls in functions that feed Pandas DataFrames, then export to BigQuery programmatically.
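A single request returns at most 25,000 rows, so larger exports page through results with `startRow`. The sketch below takes the query call as a function so it can be tested without network access. `fetch_all_rows` and `rows_to_records` are hypothetical helper names. Stopping on a short page is an assumed way to detect the last page.

```python
from typing import Callable, List


def fetch_all_rows(run_query: Callable[[dict], dict], body: dict,
                   page_size: int = 25000) -> List[dict]:
    """Page through searchanalytics.query results using startRow."""
    rows: List[dict] = []
    start = 0
    while True:
        page = run_query({**body, 'rowLimit': page_size, 'startRow': start})
        batch = page.get('rows', [])  # 'rows' is omitted when nothing matches
        rows.extend(batch)
        if len(batch) < page_size:  # a short page means no more data
            return rows
        start += page_size


def rows_to_records(rows: List[dict], dimensions: List[str]) -> List[dict]:
    """Flatten each row's 'keys' list into named columns for a DataFrame."""
    metrics = ('clicks', 'impressions', 'ctr', 'position')
    return [
        {**dict(zip(dimensions, row['keys'])), **{m: row[m] for m in metrics}}
        for row in rows
    ]
```

With the client above, `run_query` could be `lambda b: service.searchanalytics().query(siteUrl='https://example.com/', body=b).execute()`. The output of `rows_to_records(fetch_all_rows(run_query, body), body['dimensions'])` then goes straight into `pd.DataFrame`.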

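Error handling matters. Wrap calls in try-except to catch quota and rate-limit errors (an `HttpError`, typically with status 429), and retry with exponential backoff. Quotas apply per property and per project; the URL Inspection API, for example, allows 2,000 inspections per property per day. Skip this, and pipelines crumble.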
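The retry-with-backoff pattern fits in a small wrapper. A sketch, assuming you decide retryability with a predicate. With `googleapiclient`, that might be `lambda e: isinstance(e, HttpError) and e.resp.status in (429, 500, 503)`. `call_with_backoff` is a hypothetical name, and the injectable `sleep` exists only so the logic can be tested. `execute(num_retries=...)` in `google-api-python-client` already retries some transient failures on its own. A wrapper like this adds explicit control and a place to log.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar('T')


def call_with_backoff(fn: Callable[[], T],
                      is_retryable: Callable[[Exception], bool],
                      max_retries: int = 5,
                      base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying retryable errors with exponential backoff plus jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except Exception as exc:
            if attempt == max_retries or not is_retryable(exc):
                raise
            # base_delay * 1, 2, 4, ... plus random jitter so parallel jobs desync
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    raise ValueError('max_retries must be >= 0')  # only reached if the loop never ran
```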

Fixing Indexing Issues in Search Console: URL Inspection API at Scale

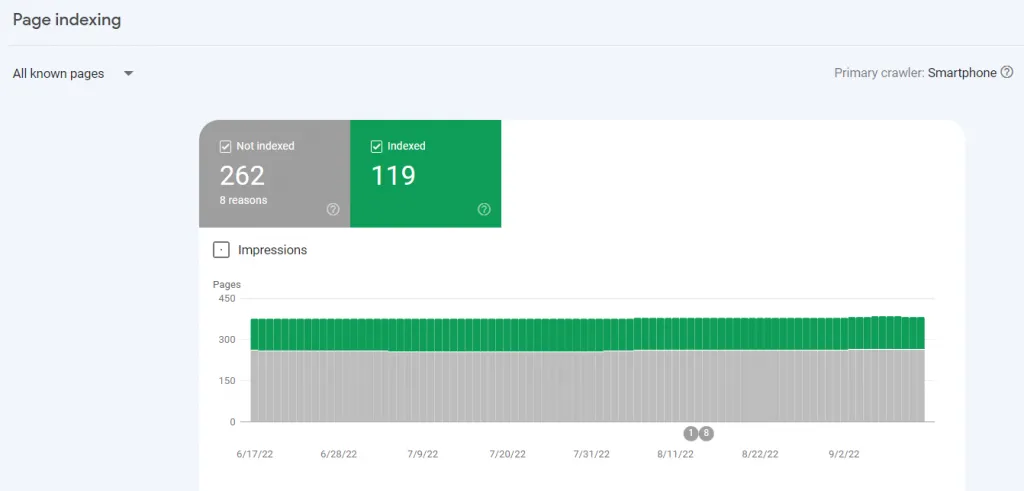

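Fixing indexing issues at scale calls for the URL Inspection API. `urlInspection.index.inspect()` returns an `inspectionResult` whose `indexStatusResult` reports `verdict`, `coverageState`, `indexingState`, `robotsTxtState`, `pageFetchState`, and `lastCrawlTime`. Mobile usability lives in a separate `mobileUsabilityResult`.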

Bulk audit from a `urls.txt` file.

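```python
import pandas as pd
from google.oauth2 import service_account
from googleapiclient.discovery import build

SITE_URL = 'https://example.com/'
SCOPES = ['https://www.googleapis.com/auth/webmasters.readonly']

# Service account for CI/prod (no browser consent). The key path is a placeholder,
# and the account's email must be added as a user on the Search Console property.
credentials = service_account.Credentials.from_service_account_file(
    'service_account.json', scopes=SCOPES)

# URL Inspection is exposed by the searchconsole v1 API, not webmasters v3
service = build('searchconsole', 'v1', credentials=credentials)


def inspect_url(service, site_url, inspection_url):
    """Return the raw URL Inspection API response for one URL."""
    body = {'inspectionUrl': inspection_url, 'siteUrl': site_url}
    return service.urlInspection().index().inspect(body=body).execute()


with open('urls.txt') as f:
    # Skip blank lines so they don't consume inspection quota
    urls = [line.strip() for line in f if line.strip()]

results = []
for url in urls:
    data = inspect_url(service, SITE_URL, url)
    status = data.get('inspectionResult', {}).get('indexStatusResult', {})
    results.append({
        'URL': url,
        'Verdict': status.get('verdict'),
        'Indexing State': status.get('indexingState'),
        'Coverage State': status.get('coverageState'),
        'Last Crawl Time': status.get('lastCrawlTime'),
    })

df = pd.DataFrame(results)
df.to_csv('indexing_audit.csv', index=False)
```

This covers thousands of URLs, though the quota (2,000 inspections per property per day) means very large sites need to spread audits across runs. Export the CSV for analysis, or integrate with GitHub Actions: run the audit on deploy and flag live pages that Google reports as noindexed or blocked.

The API reports the version in Google's index rather than running a live test, so it cannot vet pages that are not yet public. Service accounts bypass interactive auth for CI.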
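For the GitHub Actions step, the audit needs to fail the job when Google reports a blocking signal. A sketch, assuming you keep the raw `indexStatusResult` dicts keyed by URL. `BLOCKING_STATES` lists the `indexingState` values that indicate a noindex or robots.txt block. `indexing_gate` is a hypothetical helper whose return value becomes the process exit code.

```python
BLOCKING_STATES = {'BLOCKED_BY_META_TAG', 'BLOCKED_BY_HTTP_HEADER', 'BLOCKED_BY_ROBOTS_TXT'}


def find_blocked(statuses: dict) -> list:
    """Return (url, reasons) for URLs whose indexStatusResult shows a block."""
    flagged = []
    for url, status in statuses.items():
        reasons = []
        if status.get('indexingState') in BLOCKING_STATES:
            reasons.append(status['indexingState'])
        if status.get('robotsTxtState') == 'DISALLOWED':
            reasons.append('robots.txt DISALLOWED')
        if reasons:
            flagged.append((url, ', '.join(reasons)))
    return flagged


def indexing_gate(statuses: dict) -> int:
    """Print blocked URLs and return a CI exit code (1 if any are blocked)."""
    flagged = find_blocked(statuses)
    for url, reasons in flagged:
        print(f'BLOCKED {url}: {reasons}')
    return 1 if flagged else 0
```

In the workflow script, `sys.exit(indexing_gate(statuses))` turns any blocked page into a failed job.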

Building Production Pipelines: Modular Scripts and Scheduling

Python wrappers around the Google Search Console API, built on `google-api-python-client`, make reuse straightforward. Structure them as importable modules.

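```python
# gsc_client.py
import os

from google.auth.transport.requests import Request
from google.oauth2.credentials import Credentials
from google_auth_oauthlib.flow import InstalledAppFlow
from googleapiclient.discovery import build

CLIENT_SECRET_FILE = os.getenv('GSC_CLIENT_SECRET_PATH', 'client_secret.json')
TOKEN_FILE = 'token.json'
SCOPES = ['https://www.googleapis.com/auth/webmasters.readonly']


def get_gsc_service():
    """Return an authorized Search Console client, reusing token.json when possible."""
    credentials = None
    if os.path.exists(TOKEN_FILE):
        credentials = Credentials.from_authorized_user_file(TOKEN_FILE, SCOPES)
    if not credentials or not credentials.valid:
        if credentials and credentials.expired and credentials.refresh_token:
            credentials.refresh(Request())  # silent refresh, no browser needed
        else:
            # First run only: interactive browser consent
            flow = InstalledAppFlow.from_client_secrets_file(CLIENT_SECRET_FILE, SCOPES)
            credentials = flow.run_local_server(port=0)
        with open(TOKEN_FILE, 'w') as token_file:
            token_file.write(credentials.to_json())
    return build('searchconsole', 'v1', credentials=credentials)


if __name__ == "__main__":
    service = get_gsc_service()
    # Run queries here
```

Deploy via cron or Airflow DAGs: fetch daily, store results in Postgres, and trigger alerts when impressions drop by more than 20%. At Nine Lives, we chain this to AI workflows that suggest meta fixes from inspection data.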
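The >20% impression-drop alert reduces to comparing two periods page by page. A minimal sketch, assuming you have already summed impressions per page for the previous and current windows (for example from the stored Postgres rows). `impression_drops` and its `min_impressions` noise floor are hypothetical choices, not part of the API.

```python
def impression_drops(previous: dict, current: dict,
                     threshold: float = 0.20, min_impressions: int = 100) -> list:
    """Return (page, before, after, drop_ratio) for pages that fell past threshold."""
    alerts = []
    for page, before in previous.items():
        if before < min_impressions:
            continue  # low-volume pages swing wildly; skip them
        after = current.get(page, 0)  # a page missing from current data dropped to 0
        drop = (before - after) / before
        if drop > threshold:
            alerts.append((page, before, after, round(drop, 3)))
    # Worst drops first
    return sorted(alerts, key=lambda alert: alert[3], reverse=True)
```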

Build in error resilience: log quota errors and fall back to cached data. Flask endpoints can expose `/metrics` for Grafana SEO dashboards.
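The cached-data fallback can be a wrapper that refreshes a local JSON cache on success and serves it when a fetch fails. This assumes the fetched data is JSON-serializable. `fetch_with_cache` is a hypothetical helper. In production you would likely catch `HttpError` specifically rather than every exception.

```python
import json
import logging
from pathlib import Path
from typing import Any, Callable

logger = logging.getLogger('gsc_pipeline')


def fetch_with_cache(fetch: Callable[[], Any], cache_path: str) -> Any:
    """Return fresh data and update the cache, or fall back to the cache on failure."""
    path = Path(cache_path)
    try:
        data = fetch()
    except Exception:
        # Log the failure (e.g. a quota error) with its traceback
        logger.exception('GSC fetch failed; trying cache at %s', path)
        if path.exists():
            return json.loads(path.read_text())
        raise  # no cache yet, so surface the original error
    path.write_text(json.dumps(data))
    return data
```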

Real-World Wins: Indexing Fixes and Custom Dashboards

Pull search performance data weekly. Filter by query drops.

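```python
# Exclude branded queries so only non-brand organic demand remains.
# 'brand term' is a placeholder; regex expressions use RE2 syntax.
# Pass this list as the request body's 'dimensionFilterGroups'.
dimension_filter_groups = [{
    'groupType': 'and',
    'filters': [{
        'dimension': 'query',
        'operator': 'excludingRegex',
        'expression': 'brand term',
    }],
}]
```

This isolates non-branded organic signals. One pipeline can then auto-generate reports:

- URL Inspection flags blocked pages.
- Search Analytics correlates them with traffic loss.
- The Sitemaps endpoint verifies fixes after resubmission.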
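With more than one brand variant, it helps to build the `excludingRegex` expression from a list. A sketch, assuming case-insensitive matching via RE2's `(?i)` flag and escaping only RE2 metacharacters. `brand_exclusion_group` and the example terms are hypothetical.

```python
RE2_METACHARACTERS = set('\\.+*?()|[]{}^$')


def escape_re2(term: str) -> str:
    """Backslash-escape RE2 metacharacters so the term matches literally."""
    return ''.join('\\' + ch if ch in RE2_METACHARACTERS else ch for ch in term)


def brand_exclusion_group(brand_terms: list) -> dict:
    """Build a dimensionFilterGroups entry that drops queries containing any brand term."""
    # (?i) makes the match case-insensitive in RE2 (and in Python's re)
    pattern = '(?i)' + '|'.join(escape_re2(term) for term in brand_terms)
    return {
        'groupType': 'and',
        'filters': [{
            'dimension': 'query',
            'operator': 'excludingRegex',
            'expression': pattern,
        }],
    }
```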

Feed the data into BI tools such as Metabase for live views. Engineers reclaim hours, and the focus shifts to systems that self-heal indexing. True automation beats manual tweaks every time.

Scaling GSC Automation: Filters, Integrations, and Next Steps

Combining dimensions and filters (page + device + country) drills into geo-specific issues. Export the results to SEO dashboards via JSON endpoints.
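A combined page + device + country drill-down is just more filters in one `and` group. A sketch, assuming the API's conventions: `country` values are lowercase ISO 3166-1 alpha-3 codes (such as `deu`), and `device` values are `DESKTOP`, `MOBILE`, or `TABLET`. `geo_device_body` is a hypothetical helper.

```python
from typing import Optional


def geo_device_body(start_date: str, end_date: str, country: str, device: str,
                    page_contains: Optional[str] = None) -> dict:
    """Build a searchanalytics.query body filtered to one country and device."""
    filters = [
        {'dimension': 'country', 'operator': 'equals', 'expression': country.lower()},
        {'dimension': 'device', 'operator': 'equals', 'expression': device.upper()},
    ]
    if page_contains:
        filters.append({'dimension': 'page', 'operator': 'contains',
                        'expression': page_contains})
    return {
        'startDate': start_date,
        'endDate': end_date,
        'dimensions': ['page', 'device', 'country'],
        # All filters within one group are ANDed together
        'dimensionFilterGroups': [{'groupType': 'and', 'filters': filters}],
        'rowLimit': 25000,
    }
```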

Hook into Zapier or Stackby for no-code extensions, but stick to Python for control. Read-only scopes keep it safe: no accidental overwrites.

Google Search Console automation transforms reactive SEO into engineered reliability. Modular Python pipelines built on `google-api-python-client` deliver an operational edge. Build once, run forever.

GSC API automation replaces manual dashboard checks with scripted workflows. OAuth 2.0 setup is the hardest part; after that, the Search Analytics and URL Inspection APIs unlock powerful programmatic access. Build modular Python clients, schedule them with cron or Airflow, and pipe the data into your monitoring stack.

- OAuth 2.0 credentials and proper scopes are the foundation

- searchanalytics.query() extracts performance data beyond UI limits

- URL Inspection API enables bulk indexing audits at scale

- Modular Python wrappers make pipelines reusable across properties

Need help building automated SEO pipelines or AI-driven content systems? Check out our AI services or get in touch to discuss your technical SEO automation needs.